Oracle Business Intelligence (BI) Analytical Applications

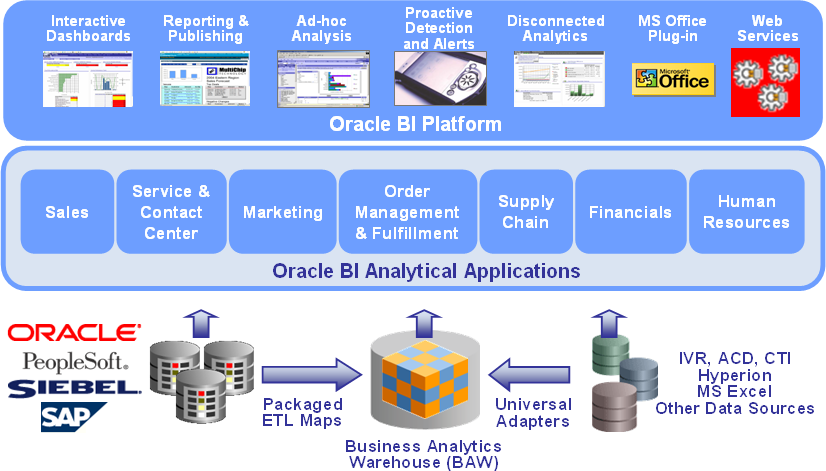

Rather than being merely a platform or development environment, Oracle Business Intelligence (BI) Analytical Applications are complete business intelligence solutions that incorporate the key metrics, workflows, and business processes for a particular business function. These solutions bundle numerous pre-built components, including:

- Dashboards
- Metrics
- Reports
- Drill-down paths
- Dimensional models
- Naming standards
- Database objects
- ETL routines
- Metadata
- Security

In addition, Oracle BI Analytical Applications contain universal adapters that enable rapid integration with, and direct connections to, leading commercial off-the-shelf (COTS) packages, including SAP, Oracle E-Business Suite, PeopleSoft, and Siebel applications.

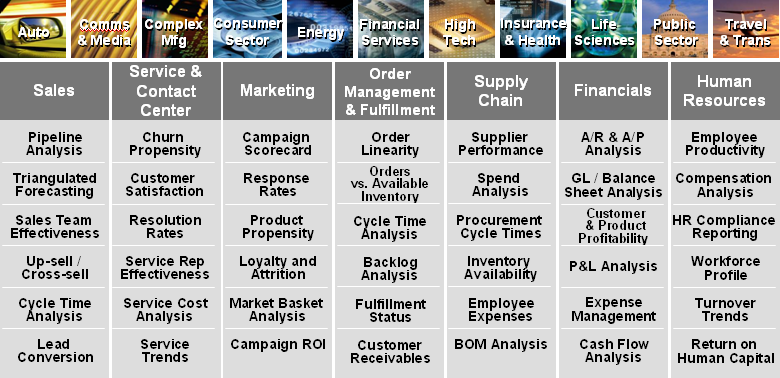

Oracle BI Analytical Applications come with industry best practices and standards built in. They also provide the functionality required to conduct business intelligence for many common business areas, including financials, human resources, sales, service, contact centers, marketing, supply chain, and order management and fulfillment.

Fundamentally, Oracle BI Analytical Applications are built on the Oracle BI Platform and provide complete, end-to-end, prebuilt business intelligence solutions that deliver intuitive, role-based intelligence to all members of an organization, from senior executives and mid-level managers to front-line employees. Rather than developing a custom business intelligence solution for each business area and function, an organization can use Oracle BI Analytical Applications to rapidly configure a ready-built solution on the complete Oracle BI Platform.

Oracle BI Analytical Applications also come bundled with two main pre-built back-end repositories:

- Business Analytics Warehouse
- ETL (Extract-Transform-Load) Repository

The Business Analytics Warehouse (BAW) is a completely pre-built data warehouse that physically contains all of the dimension and fact tables needed by the business intelligence applications. The BAW is fully compliant with the dimensional modeling methodology developed by Ralph Kimball and supports many advanced techniques, including slowly changing dimensions, conformed dimensions, aggregate tables, hierarchy tables, and surrogate keys.
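To make two of these techniques concrete, the following minimal Python sketch shows a Type 2 slowly changing dimension keyed by surrogate keys: when a tracked attribute changes, the current row is expired and a new version with a new surrogate key is appended, so history is preserved. It is a generic illustration of the Kimball technique under stated assumptions, not the BAW's actual table design or load logic; the `CustomerDimRow` structure, its columns, and the functions `apply_scd2` and `key_as_of` are hypothetical.

```python
from dataclasses import dataclass, replace
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class CustomerDimRow:
    surrogate_key: int        # warehouse-assigned key, independent of the source system
    customer_id: str          # natural (business) key from the source system
    city: str                 # tracked attribute: a change creates a new version
    valid_from: date
    valid_to: Optional[date]  # None marks the open-ended current version
    is_current: bool


def apply_scd2(dim: list[CustomerDimRow], customer_id: str, city: str,
               as_of: date) -> list[CustomerDimRow]:
    """Apply one source record to a Type 2 dimension and return the updated rows."""
    current = next(
        (r for r in dim if r.customer_id == customer_id and r.is_current), None
    )
    if current is not None and current.city == city:
        return dim  # attribute unchanged: nothing to version
    # Expire the old version instead of overwriting it, so history is preserved
    updated = [
        replace(r, valid_to=as_of, is_current=False) if r is current else r
        for r in dim
    ]
    # Hypothetical key generator: next integer after the largest existing key
    next_key = max((r.surrogate_key for r in dim), default=0) + 1
    updated.append(CustomerDimRow(next_key, customer_id, city, as_of, None, True))
    return updated


def key_as_of(dim: list[CustomerDimRow], customer_id: str, on: date) -> Optional[int]:
    """Return the surrogate key of the version that was valid on a given date."""
    for r in dim:
        if (r.customer_id == customer_id and r.valid_from <= on
                and (r.valid_to is None or on < r.valid_to)):
            return r.surrogate_key
    return None
```

If customer `C001` moves from Boston to Denver, the dimension ends up with two rows, and a fact row for an order placed before the move would look up the older surrogate key with `key_as_of`, keeping it tied to the Boston version. The in-memory list only keeps the sketch self-contained; a real warehouse would perform the same expire-and-insert steps against database tables.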

The ETL repository includes all of the routines for extracting data to a staging area, transforming the data into a common format, loading the data into the data warehouse tables, capturing changed data, and seeding data for common dimensions. The ETL repository consists of two main components: Informatica, the ETL engine that contains the data integration routines, and the DAC (Data Warehouse Administration Console), the “ETL orchestration tool” that controls application configuration, execution and recovery, and monitoring.
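The stages listed above (extract to staging, transform to a common format, load, and changed data capture) can be sketched in a few lines of Python. This minimal example assumes a timestamp-based changed data capture scheme, in which a "watermark" records the latest change already processed; that is only one common CDC approach. It does not reproduce Informatica mappings or DAC execution plans, and `SourceRow`, `STATUS_MAP`, and the functions shown are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class SourceRow:
    order_id: int            # natural key in the source system
    status: str              # source-specific status code
    amount_cents: int
    last_updated: datetime   # change timestamp used for changed data capture


# Hypothetical mapping from one source's status codes to a common warehouse format
STATUS_MAP = {"opn": "OPEN", "shp": "SHIPPED", "cnl": "CANCELLED"}


def extract_changed(source: list[SourceRow], watermark: datetime) -> list[SourceRow]:
    """Changed data capture: stage only rows modified after the previous run."""
    return [r for r in source if r.last_updated > watermark]


def transform(staging: list[SourceRow]) -> list[dict]:
    """Convert staged rows into a common, source-independent format."""
    return [
        {
            "order_id": r.order_id,
            "status": STATUS_MAP.get(r.status, "UNKNOWN"),
            "amount": r.amount_cents / 100,
        }
        for r in staging
    ]


def load(warehouse: dict[int, dict], rows: list[dict]) -> None:
    """Upsert transformed rows into the target table, keyed on the natural key."""
    for row in rows:
        warehouse[row["order_id"]] = row


def run_incremental(source: list[SourceRow], warehouse: dict[int, dict],
                    watermark: datetime) -> datetime:
    """Run extract -> transform -> load once and return the new watermark."""
    staging = extract_changed(source, watermark)
    load(warehouse, transform(staging))
    # Advance the watermark so the next run picks up only newer changes
    return max((r.last_updated for r in staging), default=watermark)
```

Calling `run_incremental` repeatedly, passing back the watermark it returns, processes only the rows that changed since the previous call. Scheduling such steps, running them in the right order, and restarting them after a failure corresponds to the orchestration role described above for the DAC.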